Executing Successful Surveys

Surveys have been around forever and come in many different lengths, formats, and intentions. Smaller surveys, while potentially less informative, can be done on-the-fly and help detect a change in sentiment about something specific. Longer surveys may take time for your customers to complete, but also have the advantage of providing more details, and correlations between responses. How and when you present them should be considered in advance and depends on your desired outcomes.

We participate in surveys sometimes without even thinking about it. Quick surveys are typically used to assess one specific aspect of your experience and are fast and unintrusive. Those ratings you give on your mobile apps, or when a cashier at a store asks:

“Did you find everything you were looking for today?”

...are just a couple of examples of how surveys are integrated seamlessly into our daily lives.

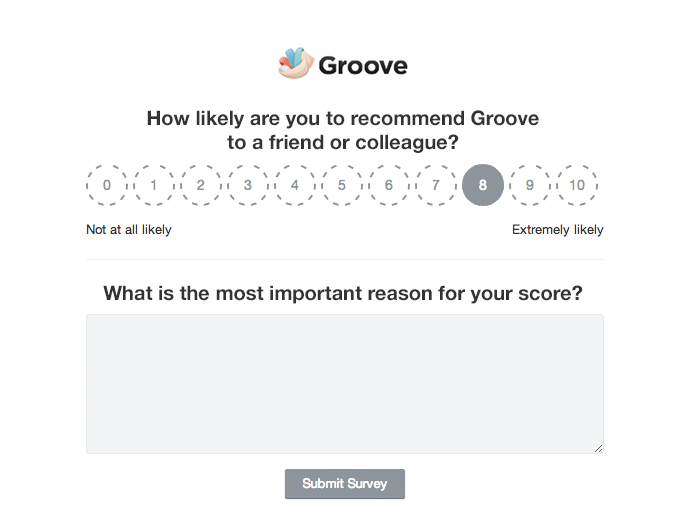

When using these short surveys, assess if the rating itself is useful or if you require more context on the answer. As a best practice, always include a “Why?” question after the main question. It may not always get a response, but if you don’t ask it, you lose the opportunity for valuable data and make the results more difficult to take action on. In the supermarket example, it is good to learn that someone struggled to find some product, but knowing it was specifically the canned tomatoes is much more useful. You can make an impactful change based on that detail.

Source: KissPNG

The ratings, along with any reasoning given, should be tracked in a common location or tool so you can analyze trends against a user, or a grouped set of users such as by company, demographic, or location. Watching these trends change as you tweak your offering could help you correct a misstep more quickly.
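As a rough illustration of tracking ratings in one place and trending them by group, the sketch below averages scores per segment and month. The field names (`company`, `month`, `score`, `why`) and the sample rows are made up for the example; a real survey tool or spreadsheet export would have its own schema.

```python
from collections import defaultdict
from statistics import mean


def average_by_segment(ratings, segment_key):
    """Average score per (segment, month), e.g. per company per month."""
    groups = defaultdict(list)
    for rating in ratings:
        groups[(rating[segment_key], rating["month"])].append(rating["score"])
    return {key: mean(scores) for key, scores in sorted(groups.items())}


# Hypothetical sample data: each rating keeps its "why" answer alongside the score.
ratings = [
    {"company": "Acme", "month": "2024-01", "score": 4, "why": "Easy checkout"},
    {"company": "Acme", "month": "2024-02", "score": 2, "why": "Could not find canned tomatoes"},
    {"company": "Birch", "month": "2024-01", "score": 5, "why": ""},
    {"company": "Acme", "month": "2024-02", "score": 3, "why": ""},
]

for (segment, month), average in average_by_segment(ratings, "company").items():
    print(segment, month, average)
```

Passing a different `segment_key`, such as a location or demographic field, would give the same trend for another grouping.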

Net Promoter Score

Net Promoter Score (NPS) is an example of a quick survey. NPS helps to measure loyalty and sentiment around your brand or a specific product or service. It is presented as a question, on either a 0-10 or 1-10 point scale.

“How likely are you to recommend our product to a family member or colleague?”

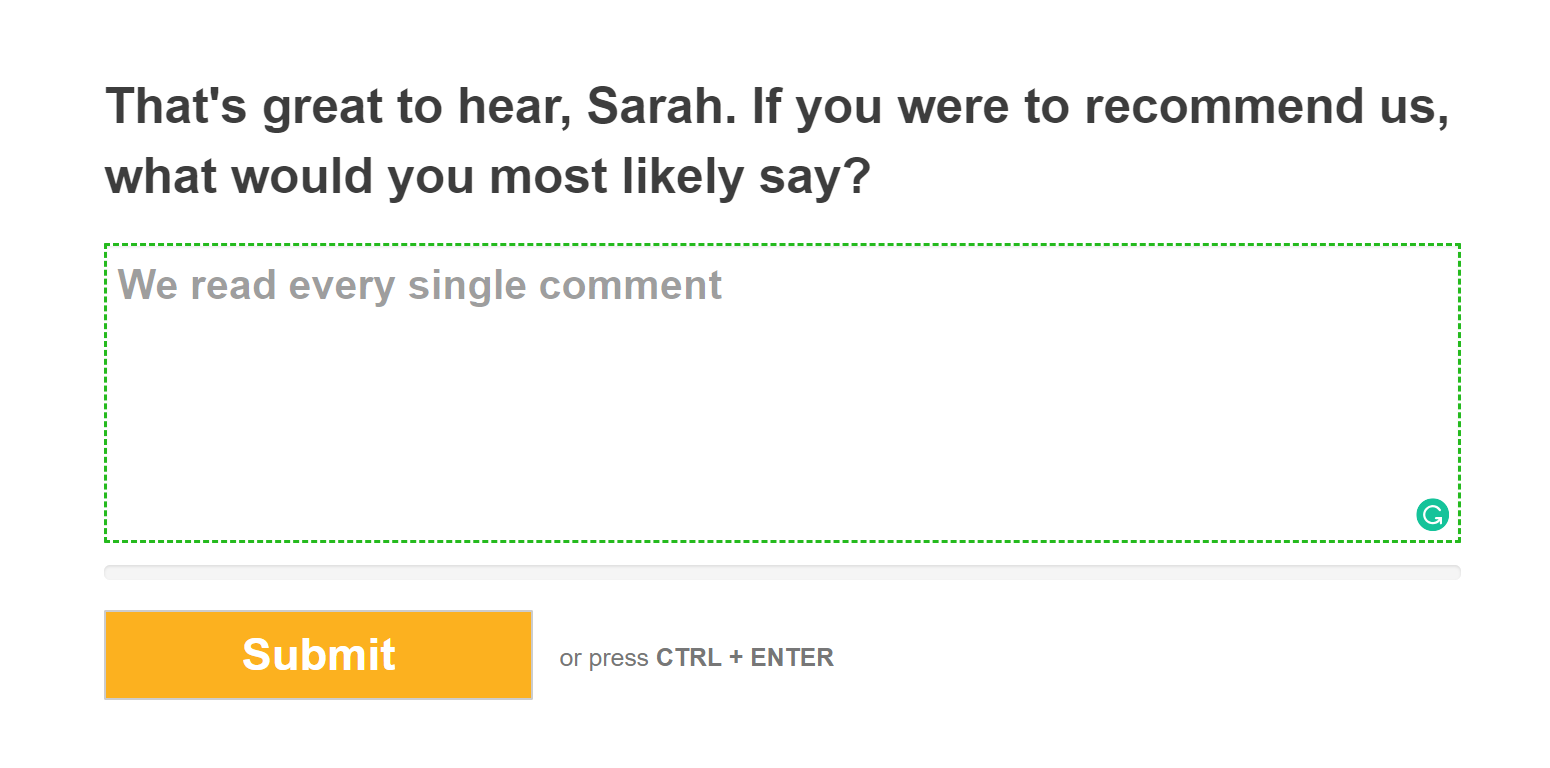

Depending on what you would like to measure, as Retently points out, you can adjust the question to different levels of specificity. For a broader scope, replace 'our product' with 'our company'; to be more specific, replace it with 'our support team' or 'this feature'.

As mentioned above, no matter what you are measuring, you should always include a follow-up question. Shep Hyken highlights the importance of learning from the NPS rating:

Two of my favorite questions to follow the standard one-to-10 survey are to ask, “Why?”, and if the number is lower than a 10, “What would it take to raise our score just by one point?”[...] That is important feedback that any company can use.

It isn’t just relevant that the customer will or won’t recommend you, it is essential to know why. With this information, you can continue doing things that create promoters and stop doing things that breed detractors.
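The text above refers to promoters and detractors without showing how the score itself is calculated. Under the standard NPS convention on a 0-10 scale, ratings of 9 or 10 count as promoters, 0 through 6 as detractors, and 7 or 8 as passives; the score is the percentage of promoters minus the percentage of detractors. A minimal sketch, with a hypothetical function name and made-up ratings:

```python
def net_promoter_score(ratings):
    """Return NPS (from -100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Made-up responses: 5 promoters, 2 passives, 3 detractors.
sample = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(sample))  # 20.0
```

Because passives dilute the total without adding to either side, two groups with the same average rating can produce quite different scores, which is one more reason to read the “Why?” answers alongside the number.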

There are two main ways to use NPS surveys: fixed interval, or in-product (also known as a real-time request).

At a fixed interval, you would send a notice to a set of your users to ask this question. This could be through email or as part of a larger survey. You would trend the results over time and report fluctuations over the fixed intervals. This methodology is useful when you wish to focus on overall sentiment in the marketplace or on quarterly planning inputs. A downside to this method is that sending the same users the same question at the same cadence can lead to fatigue, and the response rate may drop. It’s best to do this type of survey on a different, but comparable, group of users each time to avoid this problem.
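One way to survey a different group each interval is to exclude anyone asked in earlier rounds before drawing the next sample. The sketch below assumes you keep a list of previously surveyed user IDs; the function name and IDs are hypothetical, and it does not attempt to make cohorts comparable (for example by sampling evenly across customer segments), which would need extra logic.

```python
import random


def pick_cohort(all_users, already_surveyed, size, seed=None):
    """Pick `size` users who were not surveyed in earlier rounds."""
    rng = random.Random(seed)
    # Sort so that a given seed always produces the same cohort.
    candidates = sorted(set(all_users) - set(already_surveyed))
    if len(candidates) < size:
        # Everyone has been asked at least once: start a fresh rotation.
        candidates = sorted(set(all_users))
    return rng.sample(candidates, min(size, len(candidates)))


users = [f"user-{i}" for i in range(12)]
first_round = pick_cohort(users, already_surveyed=[], size=4, seed=1)
second_round = pick_cohort(users, already_surveyed=first_round, size=4, seed=2)
print(first_round)
print(second_round)
```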

In-product surveys should be set up based on key milestones. Rules can be created that align with whom you want to ask, when you want to ask them, and how frequently. For example, you may want to ask new users after their first few actions in your product, users who log in more than a certain number of times within a given time period, or users who reach a significant threshold of data. It is highly product dependent, but the key is that quick surveys work best when randomized. NPS surveys should not feel algorithmic to the end user, so they should not be tied to any noticeably specific action or pattern of behavior. You do not, for example, want to ask a consumer to rate your application or company every time they access it, or once per week. In software, you can use a tool such as Pendo to integrate quick questions into the natural workflow of your product and specify rules for who does and does not get the survey. This capability gives the sensation of randomness and avoids repetition, while still assessing the actions you want.
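The rules described above (a milestone, a limit on frequency, and an element of randomness) can be sketched as a single eligibility check. This is an illustration only and not how Pendo or any other tool implements it; the field names, thresholds, cooldown, and sample rate are all assumptions you would tune for your own product.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class UserActivity:
    actions_completed: int
    logins_last_30_days: int
    last_surveyed_at: Optional[datetime]  # None if never surveyed


def should_show_nps(user, now, rng=random, min_actions=3, min_logins=8,
                    cooldown=timedelta(days=90), sample_rate=0.2):
    """Decide whether to show the NPS question to this user right now."""
    # Frequency rule: never ask again within the cooldown window.
    if user.last_surveyed_at is not None and now - user.last_surveyed_at < cooldown:
        return False
    # Milestone rule: first few actions completed, or a frequent visitor.
    reached_milestone = (user.actions_completed >= min_actions
                         or user.logins_last_30_days >= min_logins)
    if not reached_milestone:
        return False
    # Randomization: only a fraction of eligible users see the survey,
    # so it is not tied to one predictable action.
    return rng.random() < sample_rate


now = datetime(2024, 6, 1)
print(should_show_nps(UserActivity(5, 2, None), now))
```

Recording `last_surveyed_at` when the question is shown, not only when it is answered, keeps users who dismiss the prompt from being asked again right away.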

Software offerings such as Retently or Nicereply have NPS specific features that can help you to track these trends, monitor ownership and actions stemming from the responses, and make visualization easier.

Long Form Surveys

Long form customer surveys cover a wider scope of your products and services. These surveys are expected to be broader, but it remains important to keep them as brief as possible. Too long, and your customers may not want to take the time to respond. Too short, and you won’t get the value from the time that is taken to set them up and respond. When deciding if a question is necessary, there are four questions you should ask yourself that GrooveHQ outlines:

- Does this question have a strong reason for being in this survey?

- Is it tied very closely to the topic of this survey?

- Is it background information/other noise that I don’t actually need to know?

- Is it something that is going to actually help me analyze the survey results and make any useful conclusions?

This list helps you pare a survey down to just the information you need and build a strong set of questions. You do not want to waste the respondent’s time with questions that won’t help you improve.

Your survey should always start with a general satisfaction question as an anchor metric you can view as a trend over time and user segment. Something such as:

“Overall, how satisfied are you with our [Product/Company]?”

Alternatively, you could replace this with the NPS question. Either way, it is crucial to gauge a user’s overall opinion before diving into specifics. This overall satisfaction question is useful when it is compared to the rest of the responses. You can then gauge what your satisfied customers like or dislike compared to your dissatisfied customers. If they dislike the same things, you have clearly identified an area to improve. However, if they dislike different aspects of your product or service, you may have identified a value proposition gap or some other problem that only affects a portion of your customers.

An example where this helps is if your satisfied customers think your product is simple to use, and your dissatisfied customers think it is difficult to use. You have just found a gap! Digging deeper into the data, you might find a segment of your customers needs a different onboarding process, or your knowledge center is lacking important details or is written for a technical audience or the wrong industry. The insight that a specific segment needs to be handled differently wouldn’t have been noticed if you hadn’t analyzed the correlation between satisfaction scores and how easy customers find your product to use.
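The comparison described above can be sketched by splitting responses on the anchor satisfaction question and comparing how each group rated ease of use. The 1-5 scales, the threshold of 4 for “satisfied”, and the field names are assumptions for illustration, and the responses are made up.

```python
from statistics import mean


def ease_of_use_gap(responses, satisfied_threshold=4):
    """Mean ease-of-use rating of satisfied minus dissatisfied respondents.

    Returns None when either group is empty, since no comparison is possible.
    """
    satisfied = [r["ease_of_use"] for r in responses
                 if r["satisfaction"] >= satisfied_threshold]
    dissatisfied = [r["ease_of_use"] for r in responses
                    if r["satisfaction"] < satisfied_threshold]
    if not satisfied or not dissatisfied:
        return None
    return mean(satisfied) - mean(dissatisfied)


# Made-up responses on 1-5 scales.
responses = [
    {"satisfaction": 5, "ease_of_use": 5},
    {"satisfaction": 4, "ease_of_use": 4},
    {"satisfaction": 2, "ease_of_use": 2},
    {"satisfaction": 1, "ease_of_use": 1},
]
print(ease_of_use_gap(responses))  # 3.0
```

A large gap suggests the two groups experience the product differently and is a cue to dig into who the dissatisfied respondents are; a gap near zero suggests that ease of use is not what separates them.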

Once you understand what types of information you want to collect and for what purposes, it’s time to design the survey itself. When creating a survey, make sure you understand these 2 things:

1. Which question type will give you the data you need to make a good decision?

As HubSpot outlines, there are various types of questions you can use to serve different purposes. A common mistake, for example, is to view Likert scales as interchangeable with semantic differential questions. They are both 5 or 7 point scales, and both have a quality of ranking. The difference is in the types of questions for which they should be used. Likert scales are best used for gauging agreement or disagreement with a statement, and semantic differential questions are more focused on feeling or qualitative measures. These differences are significant because they change the way you write the question and analyze the results.

For example, “On a scale of 1-5, how would you rate our service?” is a neutrally phrased, semantic differential question that could capture how a respondent feels about your service. If you were to form this question using a Likert scale, you might phrase it as “I received excellent service,” rated on a scale of Strongly Disagree to Strongly Agree. That phrasing is less neutral and can lead the customer towards a different response.

Another important survey technique is to combine open-ended and dichotomous questions. As discussed for quick surveys, it’s great to get an initial response to a dichotomous question like “Yes, I did find everything today” or “No, I am not satisfied with my service.” However, the real information comes from asking an open-ended question after, such as: “Why or Why Not?” or “What were the main factors for your rating?” Dichotomous questions are great if you want to spot check something specific. But open-ended questions are where you receive tangible feedback that can alter the way you operate or your product is designed.

Finally, while conventional wisdom states that open-ended questions provide more value, they also take significantly more time to review and analyze. Open-ended responses may be interpreted incorrectly due to language or space constraints, and their sentiment can be hard to read. Misinterpreting a response can lead to aggregating completely different ideas that happen to use similar words into the same action. Some open-ended questions, especially ones like “Is there anything else you would like to share with us?” are great questions with which to end a survey. However, if you aren’t going to invest the time to analyze the data adequately, it’s best to use a closed-ended question.

2. Know your audience

There are cultural differences in how surveys are answered. SAP highlights that language and culture can play a significant role in how respondents interpret and answer your questions. Most notably, many European countries rate 10 as low and 1 as high, versus the North American standard, which is the opposite. Always know with whom you are communicating and how to compare responses.

Another common issue is targeting the questions to a user but sending the survey to the purchaser. In B2B scenarios, there are often different actors involved in a sale. The questions you create must be targeted to the correct roles within an organization, or there will be no response, or worse, a response that skews your actual data.

If you want to reach multiple types of audiences with slightly different surveys for each, depending on their role, country, or other criteria, mail merge tools such as MailChimp are excellent at segmenting customers and delivering different content based on the criteria you specify.

Finally, once your survey questions are created, assess the time commitment for your customers and be honest about it in any communication. As a recipient of a survey, it is annoying to open something labeled as a 'quick survey', and by page 5 or minute 20 you are still wading into questions. If your survey is going to take 20 minutes, that is fine (well, maybe see if you can shorten it a little…), but tell everyone that before they click the link and start to respond. You don’t want to create any hostility while someone is trying to help you.

Survey Tooling

There are a significant number of tools on the market to help with surveys. These tools serve different purposes and have feature sets that range from generic question/answer creation and storage to niche surveying techniques. It is important that you fully outline your use cases before deciding on which one(s) to purchase.

On the more generic surveys side, tools such as SurveyMonkey or Typeform will help you structure your survey, brand it, keep it engaging and also help you to compile your analytics. These tools are heavily configurable for almost any type of survey. If you have a wide variety of survey needs, tools of this type are the route to go. Most of these tools are focused on long form feedback surveys, and while some can be embedded directly into your app, that is generally not their primary purpose. You mainly see these types of surveys delivered through a survey button or email link.

Voice of Customer tools such as Clarabridge and InMoment are specifically made to gather customer feedback. These tools get feedback by integrating seamlessly into your software product and capturing your customers’ opinions in real time without interrupting their workflow. In general, these tools are focused on the 'quick survey' type responses discussed previously. They focus on gathering smaller responses across more customers, more frequently, and allow you to trend or set up alerts based on sentiment changes within your user base. They often have features to aggregate users based on metadata such as their company or demographics, or over certain time periods. If your goal is to gather and trend customer sentiment, measure against targets and create actions based on the feedback (more on actioning feedback later!), this class of tools is a good place to start.

There are several tools that look less like a traditional survey but still collect important feedback. Similar to how large consumer product companies use special glasses and cameras to measure what colors, shelf locations, and promotions capture the shopper’s eye, software products exist to capture the same data from your website or app. Visual Feedback and User Testing tools, such as Hotjar and UserSnap, embed themselves into your product to measure what elements users click and also allow a user to comment on the location of an element or on confusing workflows. This use case is very specific but can yield powerful results to improve your product’s user experience. If you understand how your users use your product, you can make adjustments that make it easier and therefore more desirable to use.

Finally, you can also use customer forums for feedback. Often these are a marketing department initiative, but they are so much more powerful as a cross-functional endeavor. These forums encourage users to post feedback and ideas and then have discussions about them. These discussions range from bolstering the idea or adding new use cases, to solving the problem through a workaround or directing someone to training. As we will discuss below about actioning feedback, forum post moderation and responses are a must when taking this approach.

With any of the above tool types, there are important things to consider during the selection and evaluation process:

- Delivery channels for surveys

- Any integrations you need to use, including a Customer Relationship Management Tool (CRM)

- Branding Requirements

- Analytics Requirements

- Goals or changes you hope to drive by the feedback

- Cross-functional use cases

If you document the business needs and compare the tools to them, you will have an easier time choosing the one or more solutions that will make you most successful.

One feature that should be considered a must-have is the ability to automatically correlate responses to important metadata that you already have on the customer. Many survey tools have ways to remove unnecessary fields and make your surveys shorter. This feature allows you to send each individual a unique URL that encodes information you already know. This means that instead of re-requesting known information such as customer name, industry, size, contact details, etc., the form comes pre-filled, or those fields are hidden entirely from the end user. By using this functionality, you can maintain the ability to analyze certain customer segments or demographics, while lessening the burden on the people filling out the survey. Anything to make surveys easier for your respondents should be considered. Remember that submitting feedback is often a favor. If the favor is simpler, it is more likely to be done.
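To make the unique-URL idea concrete, the sketch below builds a per-customer link that carries known fields as query parameters. The base URL and parameter names are placeholders; each survey tool defines its own names and syntax for pre-filled or hidden fields, so check your tool’s documentation for the exact format.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_survey_link(base_url, customer):
    """Return a per-customer survey URL carrying already-known fields."""
    known_fields = {
        "customer_id": customer["id"],
        "industry": customer["industry"],
        "company_size": customer["company_size"],
    }
    # urlencode escapes spaces and special characters for safe use in a URL.
    return f"{base_url}?{urlencode(known_fields)}"


link = build_survey_link(
    "https://surveys.example.com/annual",  # placeholder URL
    {"id": "C-1042", "industry": "Health Care", "company_size": "51-200"},
)
print(link)
# On the receiving side, the fields can be read back from the query string.
print(parse_qs(urlsplit(link).query))
```

A common alternative is to pass only an opaque customer ID in the link and join the remaining metadata from your CRM afterwards, which keeps personal details out of the URL.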

Beyond survey-specific tools, you should also consider Customer Experience Management (CEM) tools. Tools such as Totango and Gainsight advance your ability to take in and action feedback from surveys. While these tools aren’t explicitly focused on surveying, using them has advantages. Built right into your CRM, you can use these tools to generate lists of customers that meet certain criteria, auto-generate tasks based on specific question responses, trend answers against a customer or customer segment and do in-depth analysis that helps you better action the results. If you do own a CEM tool, you also should investigate what purpose-built survey tools integrate well with them to combine the best functions of both.

Maximizing Customer Interactions

Surveys are not the only way to gather feedback. People within your organization interact with customers all the time, and you should be taking advantage of those conversations. Customer-facing teams such as Support, Success & Services are continually receiving feedback. It could come in the form of a cheer or a sigh during a feature explanation, a sarcastic “that was easy” comment during a demo or outright frustration at a process or policy you are enforcing. All of that input needs to be listened to, understood and actioned.

The phrase “this call may be recorded for quality control” is commonplace across the service industry. There is, rightly, a hyper-focus on making sure your customer-facing representatives are delivering the customer experience with which your company wants to be associated. But if you are only analyzing your calls for agent quality, you are missing one of the single biggest sources of feedback! The phrase you need to think about internally as part of the assessment is: “this interaction will be monitored for all feedback you provide.” Train your customer-facing teams to listen for key phrases and to identify feedback in normal conversation. Finally, have a process in place that can be used to collect the details and pass them on to the right team.

Source: Productboard

We get a lot of feedback during live chat conversations with our customers: feature requests, bug reports, suggestions for improving the product, etc. Thanks to the feedback, we are able to see what our existing customers want and what prevents potential customers from switching to Chatra. We take all of that into consideration when we decide what to focus on each sprint. It helps us keep Chatra a convenient and functional live chat tool. Give Chatra a try and see for yourself!

Tools such as Productboard allow you to collect unfiltered or raw feedback which can then be correlated and organized into meaningful product suggestions to be used during a product management planning cycle. A product tool can combine data generated by different teams into one place simply by having those team members forward emails, support tickets, screenshots, or slides into the tool. Once there, it can be categorized and actioned as product changes. Your customer-facing teams will become instrumental to the feedback loop and the product direction.